LTI Show and tell on assessment with Technology, 11 November 2014

LTI recently held a show and tell on assessment with technology with colleagues from LSE, UCL and Westminster. The event was well attended and gave participants an opportunity to find out about the varied ways in which technology is being used to innovate in assessment.

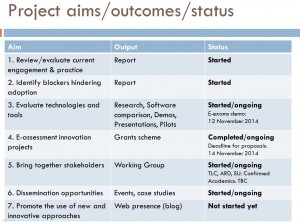

The show and tell event is part of LTI's work to promote assessment with technology at LSE. The project aims and outcomes, outlined by LTI learning technologists Athina Chatzigavriil and Kris Roger, can be seen here. If you are interested in being involved in the working group on e-assessment, or have examples of e-submission, e-marking, e-feedback or e-return, please get in touch by emailing lti.support@lse.ac.uk.

A lecture capture recording of the event (slides and audio) is now available here (LSE login required) or you can read a brief summary of the presentations below.

Alternatives to examinations

Professor George Gaskell started off the event with a brief outline of the changes taking place in LSE100, the compulsory course for undergraduates at LSE. The LSE100 Director explained that the course team is currently investigating alternatives to exams. Using the course's learning outcomes as the basis for assessment, the team has been developing a portfolio of activities that will allow students to demonstrate how they can apply social scientific methods, concepts and theories to real-world problems. The assessments will need to allow for ‘exit velocity’: letting students take risks in their first year and recognising learners' progress over their two years at LSE, while preventing strategic planning by requiring all components to be completed. The process is still at the development stage, so watch this space for updates.

Peer assessment

Dr Irini Papanicolas, from Social Policy, gave the second presentation, on her work with Steve Bond in LTI on peer assessment. Dr Papanicolas discussed how she changed the assessment on course SA4D4 from 100% exam to 50% exam and 50% presentation. She used ‘WebPA’ to enable students to rate their peers’ presentations using the course mark frame. Although peer assessment was an optional part of the assessment, all the groups volunteered feedback, and the response to the process was positive, with it prompting discussion within the groups about the assessment criteria.

This year Dr Papanicolas will be using ‘TeamMates’, as it allows students to rate not only their own group’s presentation but also individual contributions within the group.

From peer assessment to peer teaching and learning…

Kevin Tang then reported on how ‘PeerWise’ has been used at UCL. Kevin has been working with Sam Green and Stefanie Anyadi in the Department of Linguistics to use the platform with 50 undergraduate and 50 postgraduate students. PeerWise allows students to create, answer and discuss questions. Students can rate feedback and are scored on their own contributions; at UCL, these contributions are then worth a small percentage of their summative mark for the course.

Research into use of the interface indicated that it was important to support students to ‘think like an examiner’, with example questions and training on giving constructive feedback. The attitudes of academic staff also played a crucial role in student engagement, as did setting regular activities and deadlines.

As most examiners will know, it is quite hard to create good questions, so UCL asked students to devise questions in groups. The questions improved over time, with students in mixed-ability groups appearing to benefit the most. The platform provided a space for interaction: students gave each other detailed feedback, which was then used to work on future questions, and students were still using the system for revision in the lead-up to the exam.

Games and assessment in Law

Dr Vassiliki Bouki, Principal Lecturer at the University of Westminster, talked about the use of games in assessment. Dr Bouki demonstrated the ‘law of murder game’, which was developed in ‘Articulate Storyline’ and used as an alternative to coursework for a second-year criminal law module. The game was used to present a real-life scenario and assess critical thinking, and its role play allowed students to practise thinking like a lawyer. Students are given two hours to complete several small tasks in an open-book environment. The game is currently in use, so data and feedback from students will be available later in the year.

Word processed timed assessments and online feedback

Dr Sunil Kumar, Lecturer in Social Policy and Dean of Graduate Studies, talked about his experiences over three years on the course ‘urbanisation and social policy’. Concerned about how much students were actually learning under the traditional examination model, Dr Kumar introduced a two-hour online formative assessment into his course. Students typed their answers to short-answer and long-answer questions under examination-style conditions. Dr Kumar then read and marked the submissions on his iPad and uploaded the anonymised assessments, with annotated feedback, to Moodle for all students to see. The formative assessments have had 100% attendance, and students have been able to learn from each other’s submissions, which encourages them to review topics they have not yet covered in preparation for the summative examination.

More information about the project can be found in our blog post.